
Decouple Your Powershell Modules Using Events

(Source Code is available in this GitHub repository)

Powershell Modules

When building a code base in any language, we want to keep the code organised in encapsulated modules. This makes the code testable, maintainable and reusable.

Powershell offers several ways of organising code, but the most common is to organise it in Modules. A module in Powershell can include a Manifest file that declares (among other details) the Modules that this Module depends on. These dependencies will be pre-loaded when the target Module is loaded.
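As an illustration, a manifest that declares its dependencies might look like the sketch below. The module names and the version are hypothetical, chosen only for this example. RequiredModules is the manifest key that lists the modules to pre-load.

```powershell
# MyDeployer.psd1 - hypothetical manifest, for illustration only
@{
    RootModule      = 'MyDeployer.psm1'   # script module that holds the functions
    ModuleVersion   = '1.0.0'
    # Modules listed here are imported before MyDeployer itself is loaded
    RequiredModules = @('SCM', 'EmailSender')
}
```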

For .NET development, this is similar to dependencies declared in NuGet packages. The Manifest .psd1 file is comparable to the .nuspec file in NuGet.

Why decoupling?

Even with a tidy organisation of Modules, code reuse and modularity start to show their ugly face: dependency hell.

In particular, this article is concerned with two of the problems identified as a side effect of modularity:

- Many dependencies
- Long chain of dependencies

Too many (unwanted) dependencies loaded

First attempts at code modularisation do, at some point, fall short in specificity.

A module that deals with, for example, deploying an application to an environment, could end up loading modules that are not needed for that specific use case.

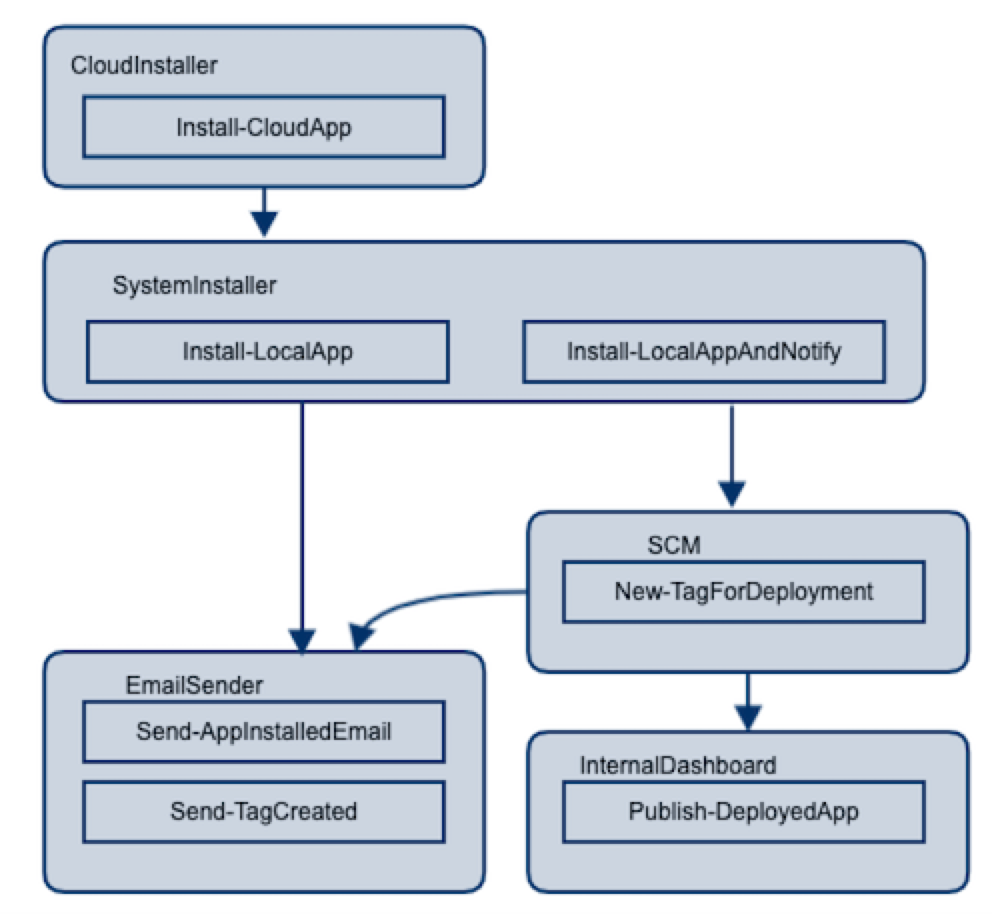

figure 1 - Diagram of highly coupled modules

For example, figure 1 shows a 4-tier hierarchy of dependencies between modules.

The module SystemInstaller installs software on the current machine (cloud or on premises). One commandlet in this module needs to tag the source code when software is ready to be tested. When this happens, the SCM module updates an internal Dashboard.

Loading SystemInstaller will also load the SCM, InternalDashboard and EmailSender modules. But if we just need to call Install-LocalApp, we only need EmailSender.

These modules may be used on different occasions and in different scenarios. The combination of these use cases will very likely result in highly coupled modules like in this example, or sometimes much worse.

Long chain of dependencies

Another side effect of loading unwanted modules is that we will also load the dependencies of these unwanted modules.

As time passes, more dependencies are added to these transitive dependencies and one day we find out that loading one module ends up loading 20 modules, of which we only need one or two.

This takes up time, consumes resources, and can have unwanted side effects in your environment, such as installing software or writing to the file system.

Decoupling Powershell modules with Events

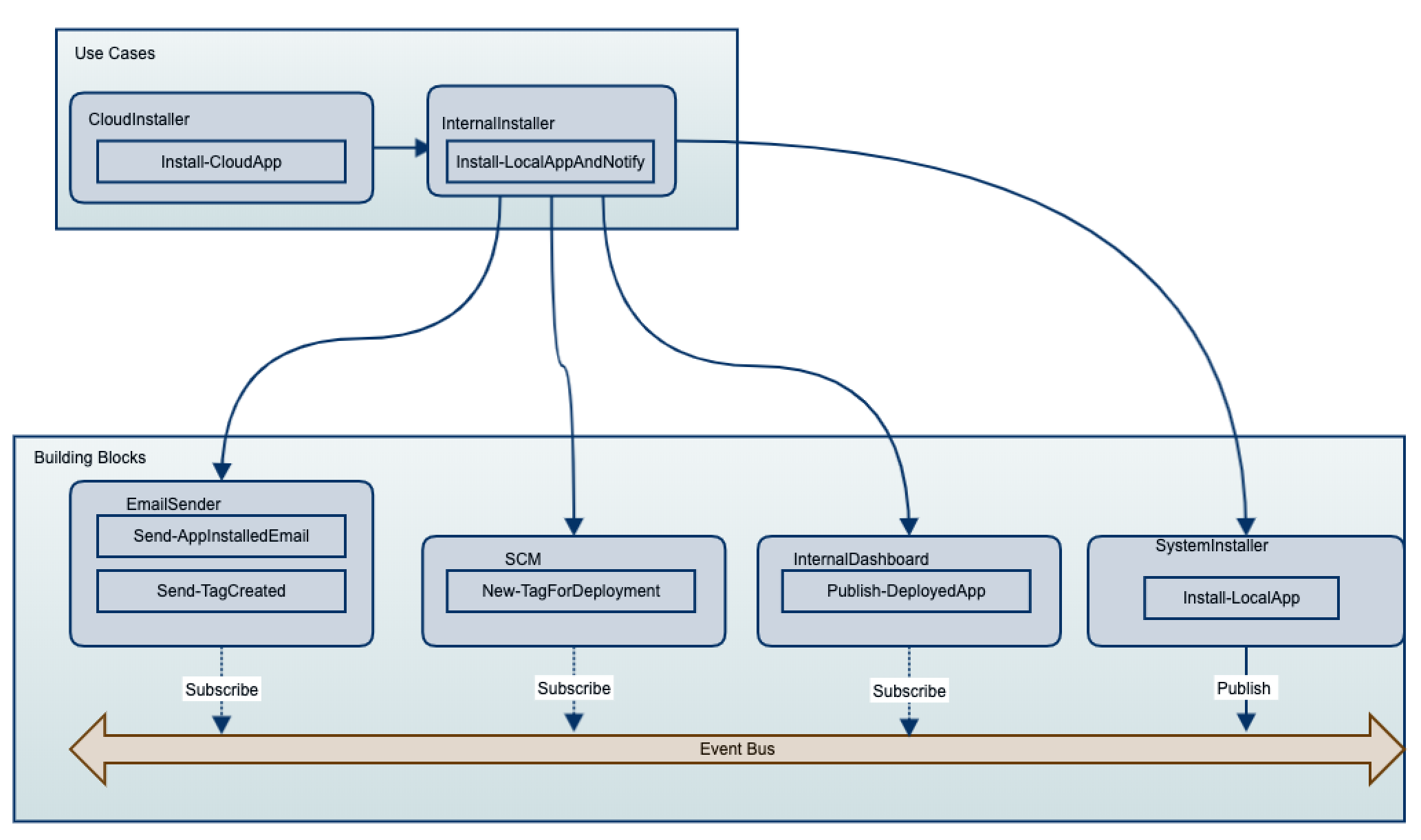

A better module organisation is to have a "flatter" hierarchical structure. The top-level modules represent specific use cases, while the lower-level modules are the building blocks.

Developers can use the building blocks provided they use get-help and understand the extent of a module.

Here is a representation of a flat hierarchy of modules for the previous graph.

In this model, Top Level modules can be thought of as mediators of the building blocks. They represent the top-level use case, which knows which building blocks are in place.

Dealing with Email Servers, Source Control Management Systems and Dashboards are all independent of each other. Use cases that need one of these libraries will import the modules and "wire" them together in a publish-and-subscribe approach.

Powershell support for events is neither widely used nor documented, but the language provides the building blocks necessary to implement such a pattern.

Implementation: Using events to decouple modules

Building blocks

The EmailSender and InternalDashboard modules have no dependencies. Their input parameters can be simple primitives like strings or integers. This makes these building block modules very easy to test and develop.

EmailSender.psm1

    function Send-TagCreatedEmail($tag, $to){
        write-host "[EmailSender]**** Sending Email to $to. Message: 'CREATED TAG $tag' ****"
    }

InternalDashboard.psm1

    function Publish-DeployedApp($appId, $deploymentId){
        write-host "[InternalDashboard]***** Publishing Deployment $deploymentId to dashboard ******"
    }

Event Publishers

SystemInstaller and SCM modules are also building blocks with no dependencies. Hence, they are also easy to develop, test and reuse.

These two modules also publish events when their goal is completed. The events are called AppInstalled and TagCreated respectively.

SystemInstaller.psm1

    function Install-LocalApp($appId){
        $deploymentId = Get-Random -Maximum 100
        write-host "[SystemInstaller]***** Intalling app with id $appId. Deployment Id: $deploymentId"
        # Publish AppInstalled with the data subscribers will need
        New-Event -SourceIdentifier AppInstalled `
            -MessageData @{appId = $appId; deploymentId = $deploymentId} | Out-Null
    }

SCM.psm1

    function New-TagForDeployment($deploymentId){
        write-host "[SCM]**** Creating Tag for deploymentId $deploymentId******"
        # Publish TagCreated with the name of the new tag
        New-Event -SourceIdentifier TagCreated `
            -MessageData @{Tag = "DEPLOYED/$deploymentId"} | Out-Null
    }

Both modules raise an event on Powershell's internal event bus. This is done using the New-Event Powershell cmdlet, which has been available since version 2.0.

Events wouldn't be too useful without additional context data. But this means that subscribers to the event need to know how to parse this data, especially when the values are complex types. This is also a type of coupling, which is why it is a good idea to keep events small, with a simple data structure.
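To see how little a subscriber needs to know when the payload is flat, here is a minimal sketch. DemoAppInstalled is a made-up source identifier for this example, and the sketch assumes that no subscriber is registered for it, so the event simply waits in the session's event queue where Get-Event can read it.

```powershell
# Raise an event whose payload is a flat hashtable of primitives.
# 'DemoAppInstalled' is a hypothetical source identifier used only here.
New-Event -SourceIdentifier DemoAppInstalled `
    -MessageData @{ appId = 42; deploymentId = 60 } | Out-Null

# With no subscriber registered, the event stays in the session's event queue
$queued = Get-Event -SourceIdentifier DemoAppInstalled

# A consumer only needs to know two key names, nothing about the publisher
$appId        = $queued.MessageData.appId
$deploymentId = $queued.MessageData.deploymentId
Write-Host "App $appId was installed by deployment $deploymentId"

# Remove the event from the queue once it has been handled
Remove-Event -SourceIdentifier DemoAppInstalled
```

If the payload were a nested or custom object instead, every subscriber would have to know its shape, which is the coupling the paragraph above warns about.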

Event Subscriber

Finally, the top-level script loads all the building block modules and "connects" the events from some of them to the commands in others.

We need to set up three event listeners:

- When AppInstalled occurs, we publish the news to the InternalDashboard
- Also, we need to tag the source code using the SCM module
- Finally, when the SCM module publishes TagCreated, members of the Test teams need to be notified by email, so that they can start testing.

Adding subscribers to Powershell events is done with the Register-EngineEvent commandlet.

Register-EngineEvent differs from Register-ObjectEvent in that the latter allows listening for events raised by a .NET object. The former allows listening for events raised within Powershell.
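The difference can be sketched side by side. The source identifiers DemoTimerElapsed and DemoEngineEvent are invented for this example, and a System.Timers.Timer is used only because it is a convenient .NET object that raises an event. Neither subscription has an -Action here, so the events are queued and read back with Wait-Event.

```powershell
# .NET object event: a System.Timers.Timer raises its Elapsed event
$timer = New-Object System.Timers.Timer
$timer.Interval  = 200      # milliseconds
$timer.AutoReset = $false   # fire only once
Register-ObjectEvent -InputObject $timer -EventName Elapsed `
    -SourceIdentifier DemoTimerElapsed
$timer.Start()
$fromDotNet = Wait-Event -SourceIdentifier DemoTimerElapsed -Timeout 5

# Engine event: raised by Powershell code itself through New-Event
Register-EngineEvent -SourceIdentifier DemoEngineEvent
New-Event -SourceIdentifier DemoEngineEvent `
    -MessageData 'raised from Powershell' | Out-Null
$fromEngine = Wait-Event -SourceIdentifier DemoEngineEvent -Timeout 5

# The object event carries the .NET sender, the engine event carries MessageData
Write-Host "Object event sender: $($fromDotNet.Sender.GetType().FullName)"
Write-Host "Engine event data:   $($fromEngine.MessageData)"

# Clean up subscriptions, queued events and the timer
Unregister-Event -SourceIdentifier DemoTimerElapsed
Unregister-Event -SourceIdentifier DemoEngineEvent
Remove-Event -SourceIdentifier DemoTimerElapsed
Remove-Event -SourceIdentifier DemoEngineEvent
$timer.Dispose()
```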

After wiring these events, we proceed with the action: install an application locally.

InternalInstaller.psm1

    Import-Module SCM
    Import-Module InternalDashboard
    Import-Module EmailSender
    Import-Module SystemInstaller

    function Install-LocalAppAndNotify($appId){
        # When App is Installed, Publish to the Dashboard
        Register-EngineEvent -SourceIdentifier AppInstalled `
            -Action {
                InternalDashboard\Publish-DeployedApp `
                    -appId $event.messagedata.appId `
                    -deploymentId $event.messagedata.deploymentId
            } | Out-Null

        # ... and create a tag in source control
        Register-EngineEvent -SourceIdentifier AppInstalled `
            -Action {
                SCM\New-TagForDeployment `
                    -deploymentId $event.messagedata.deploymentId
            } | Out-Null

        # Email testers when the tag is created
        Register-EngineEvent -SourceIdentifier TagCreated `
            -Action {
                EmailSender\Send-TagCreatedEmail `
                    -tag $event.messagedata.Tag `
                    -to 'Testers@myorg.com'
            } | Out-Null

        # Now install the application
        write-host "[InternalInstaller]**** Starting Installation "
        SystemInstaller\Install-LocalApp -appId $appId

        #Do not forget to remove event listeners!!
        Get-EventSubscriber | Unregister-Event
    }

When we run this top-level script, the end result is as desired. We execute it this way:

    $env:PSMODULEPATH = $pwd
    Import-Module InternalInstaller
    Install-LocalAppAndNotify 42

This is the console output. Notice that the event publish and subscribe pattern is running synchronously:

    [InternalInstaller]**** Starting Installation
    [SystemInstaller]***** Intalling app with id 42. Deployment Id: 60
    [InternalDashboard]***** Publishing Deployment 60 to dashboard ******
    [SCM]**** Creating Tag for deploymentId 60******
    [EmailSender]**** Sending Email to Testers@myorg.com. Message: 'CREATED TAG DEPLOYED/60' ****
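One thing to keep in mind is that the cleanup step at the end of Install-LocalAppAndNotify works on every subscriber returned by Get-EventSubscriber, including listeners that other code in the same session may have registered. A more cautious variant, sketched below under the assumption that subscription ids are enough to tell listeners apart, removes only the listeners it added. DemoJobDone and DemoLastJob are hypothetical names used only in this sketch.

```powershell
# Remember which subscriptions existed before we add our own
$existing = @(Get-EventSubscriber | ForEach-Object { $_.SubscriptionId })

try {
    # 'DemoJobDone' is a hypothetical event name used only in this sketch
    Register-EngineEvent -SourceIdentifier DemoJobDone -Action {
        # Actions run in their own scope, so the result goes to a global variable
        $global:DemoLastJob = $event.MessageData.jobId
    } | Out-Null

    New-Event -SourceIdentifier DemoJobDone -MessageData @{ jobId = 7 } | Out-Null
}
finally {
    # Remove only the listeners added above, even if something failed
    Get-EventSubscriber |
        Where-Object { $existing -notcontains $_.SubscriptionId } |
        Unregister-Event
}
```

The try/finally block means the listeners are removed even when the installation step throws.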


Find Jobs In All Jenkins Folders

Sometimes it is useful to list or apply an operation to a Jenkins job based on a regular expression. The Jenkins DSL plugin, which executes Groovy code within Jenkins, is probably the easiest way to achieve this.

I was able to find code snippets in my first web search. However, all the solutions I found had a major limitation: they did not deal with Jenkins folders.

In case you have not used them yet, Jenkins folders is a great plugin that lets you organise your jobs into "container" folders, just like you would do on a file system.

Searching recursively

The following Groovy snippet will return a list of jobs across all folders. Optionally, you can pass a regular expression to filter jobs by name. This regular expression will be matched against the full name of the job, that is, including folder names. This allows you, for example, to find all unit test jobs in a folder.

    import jenkins.model.Jenkins

    // A folder is an item of the Folder class from the Folders plugin
    def isFolder(abstractJob){
        return abstractJob.getClass().getName() == "com.cloudbees.hudson.plugins.folder.Folder"
    }

    def collectJobRecursively(abstractJob, resultCollector){
        if(isFolder(abstractJob)) {
            abstractJob.items.findAll().each{ nestedJob ->
                collectJobRecursively(nestedJob, resultCollector)
            }
        } else {
            //only add actual jobs to the list
            resultCollector << abstractJob
        }
    }

    def findAll(pattern = '.*'){
        def resultCollector = []
        Jenkins.instance.items.findAll().each{ job ->
            collectJobRecursively(job, resultCollector)
        }
        return resultCollector.findAll{
            // apply the regular expression here.
            // we are matching the Full Name (including folder names)
            (it.fullName =~ /$pattern/)
        }
    }

Using the recursive findAll function

You can now use the previous findAll function to search for all unit test jobs in a folder called "master_branch":

    findAll(".*/master_branch/.*UnitTest.*").each{ job ->
        if(job.lastBuild){
            println job.fullName + ':' + job.lastBuild.result
        } else {
            println job.fullName + " has not run yet";
        }
    }

Delete Stale Git Branches With Powershell

With the increasing adoption of source code management workflows such as Git Flow, it is common to end up with "stale" branches in local repositories.

Steps to reproduce

The steps needed to end up in this situation are more or less like this:

- Dev1 creates & publishes the branch Feature1

      git checkout -B Feature1
      # ...make & commit changes...
      git push -u origin Feature1

- Dev2 fetches the branch to collaborate or review

      git fetch
      git checkout Feature1

- Dev2 deletes the Feature1 branch after merging it into master

      # delete the branch on the remote
      git push origin --delete Feature1
      # delete the branch locally
      git branch -D Feature1

Pruning stale references

At this stage, Dev1 (and 3, 4 etc) will want to have the deleted branch gone from their workspace too.

A quick search will lead you to the prune subcommand:

    $> git remote update origin --prune
    Fetching origin
    From https://github.com/sebastianslutzky/TestRepo
     x [deleted]         (none)     -> origin/Feature1

However, this command only removes the reference to the tracking branch, not the local branch itself. You can see that the orphan branch is still here:

    $> git branch -l
      Feature1
    * master

Delete branches not in the remote

So here is a simple Powershell script that deletes the orphan local branch:

    $local=git branch -l
    $remote=git branch -r
    $local|
        %{$_.Trim()}|
        ?{-not ($remote -like '*' + $_) }|
        ?{-not($_ -match "master" )}|
        %{git branch -D $_}

Surely this code could be compressed into a single piped command, but that could become a bit confusing.

We get the lists of local and remote branches (lines 1 & 2). Then we perform a set subtraction ($local - $remote) (line 5). This gives us the set of local branches that are not in the remote.

We finally delete them in line 7.
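The same set subtraction can also be written as a small function that works on plain lists of names, which makes it easy to try out without touching a repository. This is only a sketch: Get-OrphanBranch is a hypothetical helper name, and it assumes that the remote is called origin and that master must never be deleted.

```powershell
# Sketch: return the local branch names that have no counterpart on 'origin'.
# Get-OrphanBranch is a hypothetical helper, not part of git or Powershell.
function Get-OrphanBranch {
    param(
        [string[]]$Local,                   # e.g. 'master', 'Feature1'
        [string[]]$Remote,                  # e.g. 'origin/master'
        [string[]]$Protected = @('master')  # branches that are never returned
    )
    # Remote names look like 'origin/Feature1'; strip the 'origin/' prefix
    $remoteNames = $Remote | ForEach-Object { $_ -replace '^origin/', '' }

    # Set subtraction: keep local names that are neither remote nor protected
    $Local | Where-Object {
        ($remoteNames -notcontains $_) -and ($Protected -notcontains $_)
    }
}

# Possible usage inside a repository (git prints one short name per line):
#   $local  = git for-each-ref --format='%(refname:short)' refs/heads
#   $remote = git for-each-ref --format='%(refname:short)' refs/remotes/origin
#   Get-OrphanBranch -Local $local -Remote $remote | ForEach-Object { git branch -D $_ }
```

Comparing exact names avoids two pitfalls of wildcard matching: the "* " marker that git branch prints in front of the current branch, and branches whose names merely contain "master".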


Take control of your Web API aspects

Action filters are a way of modifying or decorating the execution of one or more action methods. They provide points of execution before and after the action method itself executes.

For more details on action filters see this article

Order of Execution: Salami Slice + Russian Doll

You can have action filters declared at three levels: globally, controller-wide or action-specific. They will execute in that order…sort of.

Once ordered, action filters are executed sequentially, without connection between them.

But here's the fun bit: the order of execution is reversed when the response is processed. In other words, if when processing the request the order of filters is

then when processing the response the order will be

there are some design patterns like:

-

I prefer to use the more visual Salami Slice for the sequential execution.

Overriding a global filter from an action method

This default order is convenient in many cases, but not always. I found myself in the following scenario: I needed to execute a default action filter unless an action was annotated with an overriding action filter attribute.

Two examples when this could be useful:

- Always log http traffic, except sensitive data:
  - Log the raw http request and response to all action methods.
  - But: when the request is for PUT /PaymentCardDetails, then use a custom logger that will not log the payment card number and security code.
- Always require authentication tokens, except Login and Forgot Password methods:
  - Add a global action filter that will always require an authentication token in the request's header.
  - But: annotate the Login and ForgotPassword action methods with an attribute that will bypass the authentication requirement.

…so here is the implementation:

Step 1: Create an interface for overriding attributes

Something like this is the simplest thing that can possibly do the job:

    //To be implemented by actionfilterattributes that override a global action filter
    public interface IOverridingFilterAttribute
    {
        //The type of actionfilter to be replaced for this request
        Type OverriddenType { get; }
    }

Step 2: Create your own ActionFilterProvider with the overriding behaviour

IFilterProvider is a component you can customise. It defines which action filters to use in this request, and in which order.

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Web.Http;
    using System.Web.Http.Controllers;
    using System.Web.Http.Filters;

    public class OverridingFilterProvider : IFilterProvider
    {
        //called by WebApi on each request, in order
        // to get the list of filters to apply
        public IEnumerable<FilterInfo> GetFilters(HttpConfiguration configuration, HttpActionDescriptor actionDescriptor)
        {
            var globalFilters = configuration.Filters;
            var controllerFilters = actionDescriptor.ControllerDescriptor.GetFilters()
                .Select(x => new FilterInfo(x, FilterScope.Controller));
            var actionFilters = actionDescriptor.GetFilters()
                .Select(x => new FilterInfo(x, FilterScope.Action));

            //we'll apply global,controller-wide
            //and then action attribute filters
            var all = globalFilters.Concat(controllerFilters).Concat(actionFilters);
            return RemoveAnyOverriddenFilter(all);
        }

        //if there are IOverridingFilterAttributes,
        //remove the filters they override
        private IEnumerable<FilterInfo> RemoveAnyOverriddenFilter(IEnumerable<FilterInfo> all)
        {
            var overridingFilters = all.Where(x => (x.Instance as IOverridingFilterAttribute) != null)
                .Select(x => (IOverridingFilterAttribute) x.Instance);
            var filtered = all.Where(x => !IsOverridden(x, overridingFilters));
            return filtered;
        }

        //true when the target filter's type is replaced by one of the overrides
        private bool IsOverridden(FilterInfo target, IEnumerable<IOverridingFilterAttribute> overrides)
        {
            foreach (var overridingFilter in overrides)
            {
                var filterType = target.Instance.GetType();
                var overriddenType = overridingFilter.OverriddenType;
                if (overriddenType.IsAssignableFrom(filterType))
                    return true;
            }
            return false;
        }

        private IEnumerable<FilterInfo> OrderFilters(IEnumerable<IFilter> filters, FilterScope scope)
        {
            return filters.Select(instance => new FilterInfo(instance, scope));
        }
    }

Step 3: Register your Filter Provider

    public class WebApiApplication : HttpApplication
    {
        protected void Application_Start()
        {
            var services = GlobalConfiguration.Configuration.Services;
            services.Replace(typeof(IFilterProvider), new OverridingFilterProvider());
        }
    }

Naked Objects Is Opensource

The Naked Objects framework for .NET is now open sourced under the Microsoft Public License.

The project is now hosted at CodePlex.

You can download the framework from there or, in Visual Studio, from NuGet.